By Ted G. Lewis

Background

The Federal Bureau of Investigation (FBI) reports of a dozen physical attacks on the Internet backbone near Sacramento, California, highlight the sad state of physical security in telecommunications and of the policies meant to cope with threats to the communications sector. Sacramento is an intermediary point in the Internet backbone that carries East-West traffic: it connects the Chicago and San Francisco hubs and ultimately links the West Coast with the rest of the US and Europe. No wonder the bad guys physically cut the fiber optic cable near Sacramento; it is a critical link in one of the most heavily traveled cable routes in the US.

A popular misconception is that the physical Internet is among the most resilient infrastructures in modern society. After all, TCP/IP is often said to have been designed in the 1970s to withstand a nuclear attack. This claim rests on the mistaken belief that the physical Internet contains many alternate routes: when a break on one route is sensed, traffic is simply rerouted through a redundant alternate path. Unfortunately, this is no longer generally true, because the 1996 Telecommunications Act creates incentives for cost savings through resource sharing and requires peering, two factors that are shaping the physical Internet. (Peering is the act of sharing networks across vendors.)
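The rerouting idea can be illustrated with a toy model. This is a minimal sketch, not how production routing works: it assumes a simple hop-count metric and hypothetical city nodes (SFO, SAC, CHI, LAX, DAL), whereas real inter-domain routing (BGP) selects paths by policy. A breadth-first search finds a route; when a link is cut, the search is rerun without that link, and it succeeds only if a redundant path still exists.

```python
from collections import deque

def build(links):
    """Turn a list of (a, b) links into an undirected adjacency map."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj

def route(adj, src, dst, failed=frozenset()):
    """Hop-count shortest path from src to dst that avoids every link in
    `failed` (each failed link is a frozenset of its two endpoints).
    Returns the path as a list of nodes, or None if no route survives."""
    parent = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk parent pointers back to src
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nbr in sorted(adj[node]):  # sorted for a deterministic result
            if nbr not in parent and frozenset((node, nbr)) not in failed:
                parent[nbr] = node
                queue.append(nbr)
    return None

# Hypothetical topologies: one with a southern detour, one without it.
redundant = build([("SFO", "SAC"), ("SAC", "CHI"),
                   ("SFO", "LAX"), ("LAX", "DAL"), ("DAL", "CHI")])
pruned = build([("SFO", "SAC"), ("SAC", "CHI"), ("SFO", "LAX")])
cut = {frozenset(("SAC", "CHI"))}  # the Sacramento-Chicago link is severed

print(route(redundant, "SFO", "CHI"))       # normal path via SAC
print(route(redundant, "SFO", "CHI", cut))  # rerouted via LAX and DAL
print(route(pruned, "SFO", "CHI", cut))     # None: no alternate path left
```

Once the "redundant" detour is pruned away to save cost, the same cut leaves San Francisco with no route to Chicago at all.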

A slow but relentless process of self-organized criticality (SOC) is weakening the communications sector, and the 1996 Telecommunications Act is the major factor driving it. If the trend continues, the sector will become even more fragile as a limited number of highly connected hubs (Tier-1 ISPs or Autonomous Systems) emerge. Destroying these super hubs through physical or cyber attacks could dismantle the entire sector, with catastrophic consequences.

What Is Happening?

In the roughly 20 years since the Telecommunications Act of 1996, the physical resiliency of the Internet has slowly eroded because of bad policy and cost-benefit economics. The 1996 Act requires Internet Service Providers (ISPs) to peer, or share each other’s networks. Although the Act was intended to encourage competition, its unintended consequence is a dramatic increase in vulnerability, as operators consolidate servers and cables in shared facilities to peer and drive down their operating costs. In fact, the National Security Telecommunications Advisory Committee (NSTAC) declared these peering facilities, called carrier hotels, the number one vulnerability of the communications infrastructure:

“The current environment, characterized by the consolidation, concentration, and collocation of telecommunications assets, is the result of regulatory obligations, business imperatives, and technology changes.”[1]

Carrier hotels are a dangerous consequence of regulation, with potentially national impact if one or more should fail:

“…Loss of specific telecommunications nodes can cause disruption to national missions under certain circumstances.”[2]

Economics also plays a major role in weakening the sector. As the Internet becomes increasingly commercialized and regulated, it also becomes increasingly efficient and cost-effective. ISPs like AT&T, Level 3, Verizon, Hurricane Electric, Comcast, and Time Warner eliminate redundancy in the name of efficiency and profitability. What is wrong with efficiency? Efficiency translates into lower redundancy. Lower redundancy means more vulnerability. And more vulnerability means more exposure to hacks, outages, and exploits.

For the past decade, ISPs have been putting more eggs into fewer baskets: fewer ISPs and fewer cables carry the global load. Even if ISPs don’t reduce the number of paths available in the physical cabling of the Internet, extremely critical blocking nodes inadvertently appear. A blocking node is a node whose removal segments the Internet into disjoint islands. Blocking nodes are the most critical of all autonomous systems.[3]
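A blocking node, as defined above, corresponds to what graph theory calls an articulation point (or cut vertex). The most direct way to find one follows the definition literally: delete each node in turn and check whether the network falls into more pieces. The sketch below does exactly that on a hypothetical seven-node network (two triangles joined through a relay node H); the node names are illustrative assumptions, not real autonomous systems.

```python
from collections import deque

def count_components(adj, removed=None):
    """Count connected components, treating `removed` as deleted."""
    seen = {removed} if removed is not None else set()
    components = 0
    for start in adj:
        if start in seen:
            continue
        components += 1
        seen.add(start)
        queue = deque([start])
        while queue:  # breadth-first flood fill of one component
            node = queue.popleft()
            for nbr in adj[node]:
                if nbr not in seen:
                    seen.add(nbr)
                    queue.append(nbr)
    return components

def blocking_nodes(adj):
    """Nodes whose deletion increases the number of components."""
    baseline = count_components(adj)
    return sorted(n for n in adj if count_components(adj, removed=n) > baseline)

# Hypothetical network: triangle A-B-C, relay H, triangle D-E-F.
links = [("A", "B"), ("B", "C"), ("C", "A"), ("C", "H"),
         ("H", "D"), ("D", "E"), ("E", "F"), ("F", "D")]
adj = {}
for a, b in links:
    adj.setdefault(a, set()).add(b)
    adj.setdefault(b, set()).add(a)

print(blocking_nodes(adj))  # ['C', 'D', 'H']
```

Every candidate requires a full traversal of the network, so this brute-force check is easy to verify but scales poorly to tens of thousands of autonomous systems.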

The presence of blocking nodes means the Internet can be severed into disconnected pieces by removing a single node. In the example used here, an AS-level network of 13,579 autonomous systems and 37,448 links, removing any one of 2,006 blocking nodes can segment the Internet into disjoint, non-communicating sub-networks. Removing even a fraction of these critical nodes would have catastrophic consequences.
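One common way to quantify how badly a network is segmented (an illustrative choice, not necessarily the metric used in the analysis above) is the fraction of node pairs that can still reach each other after some nodes are removed. The sketch below applies it to a hypothetical 13-node hierarchy: a core node X linked to three regional hubs, each serving three leaf networks.

```python
from collections import deque

def build(links):
    """Turn a list of (a, b) links into an undirected adjacency map."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj

def component_sizes(adj, removed=frozenset()):
    """Sizes of the connected components left after deleting `removed`."""
    seen = set(removed)
    sizes = []
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        queue, size = deque([start]), 0
        while queue:
            node = queue.popleft()
            size += 1
            for nbr in adj[node]:
                if nbr not in seen:
                    seen.add(nbr)
                    queue.append(nbr)
        sizes.append(size)
    return sizes

def reachable_pair_fraction(adj, removed=frozenset()):
    """Share of the original n*(n-1)/2 node pairs that can still communicate.
    Pairs involving a removed node count as lost."""
    n = len(adj)
    surviving = sum(s * (s - 1) // 2 for s in component_sizes(adj, removed))
    return surviving / (n * (n - 1) // 2)

# Hypothetical hierarchy: core X -> hubs R1..R3 -> three leaves per hub.
links = []
for hub in ("R1", "R2", "R3"):
    links.append(("X", hub))
    links += [(hub, f"{hub}-leaf{i}") for i in range(3)]
adj = build(links)

for removed in [set(), {"R1-leaf0"}, {"X"}, {"X", "R1"}]:
    print(sorted(removed), round(reachable_pair_fraction(adj, removed), 3))
```

Losing one leaf network still leaves about 85% of node pairs connected, while losing the single blocking node X leaves only about 23%, and removing X together with R1 drops it to about 15%.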

It is impossible to tell whether a node is a blocking node simply by looking at the massive AS-level network.[4] Instead, blocking nodes must be identified algorithmically. The full AS-level network used here, with 13,579 autonomous systems (nodes) and 37,448 peering relations (links), contains 2,006 blocking nodes (nearly 15% of all nodes), a result that took hours of computation to determine. The 2015 AS-level Internet, with 52,687 nodes and 461,337 links, has not been fully analyzed to determine the number or position of its blocking nodes, but if only 1% of its autonomous systems are blocking nodes, then each of over 500 of them can segment the Internet.
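For scale, 2,006 of 13,579 nodes is about 14.8%, and 1% of 52,687 autonomous systems is about 527. A brute-force search that re-checks connectivity after removing each node costs on the order of V × (V + E) steps for V nodes and E links. A well-known alternative, the Hopcroft-Tarjan depth-first search, finds every articulation point in a single O(V + E) pass by tracking, for each node, the earliest-discovered node reachable from its DFS subtree through one back link. The sketch below is an iterative version (to avoid Python's recursion limit on large graphs); it is offered as a standard textbook technique, not as the method used to produce the figures above, and the test network is hypothetical.

```python
def articulation_points(adj):
    """Hopcroft-Tarjan: all cut vertices of an undirected graph in O(V + E).
    `adj` maps each node to a set of neighbours (no self-loops)."""
    disc, low = {}, {}  # discovery time; lowest discovery time reachable
    points = set()
    timer = 0
    for root in adj:
        if root in disc:
            continue
        disc[root] = low[root] = timer
        timer += 1
        root_children = 0
        stack = [(root, None, iter(adj[root]))]  # (node, parent, neighbours)
        while stack:
            node, parent, neighbours = stack[-1]
            advanced = False
            for nbr in neighbours:
                if nbr not in disc:  # tree edge: descend into nbr
                    disc[nbr] = low[nbr] = timer
                    timer += 1
                    if node == root:
                        root_children += 1
                    stack.append((nbr, node, iter(adj[nbr])))
                    advanced = True
                    break
                elif nbr != parent:  # back edge to an earlier node
                    low[node] = min(low[node], disc[nbr])
            if advanced:
                continue
            stack.pop()  # node finished: report its low value upward
            if parent is not None:
                low[parent] = min(low[parent], low[node])
                # A non-root parent is a cut vertex if this subtree cannot
                # reach above the parent without going through it.
                if parent != root and low[node] >= disc[parent]:
                    points.add(parent)
        if root_children > 1:  # a DFS root is a cut vertex if it has 2+ subtrees
            points.add(root)
    return points

# Hypothetical network: triangle A-B-C, relay H, triangle D-E-F.
links = [("A", "B"), ("B", "C"), ("C", "A"), ("C", "H"),
         ("H", "D"), ("D", "E"), ("E", "F"), ("F", "D")]
adj = {}
for a, b in links:
    adj.setdefault(a, set()).add(b)
    adj.setdefault(b, set()).add(a)
print(sorted(articulation_points(adj)))  # ['C', 'D', 'H']
```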

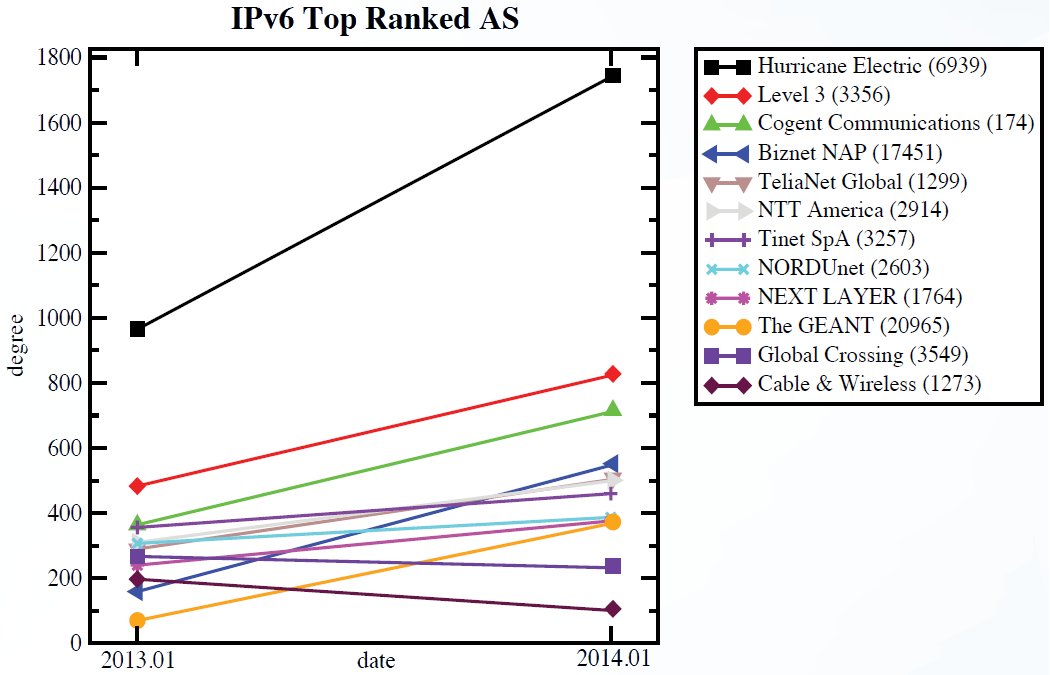

Now add to the model the efficiency demanded of expensive backbone networks. Rather than increase the number of links, Tier-1 ISPs increase the bandwidth (or number of cables) of fewer links, for economic reasons. This creates a self-organizing feedback loop: fewer links are used by more ISPs, which increases the hub size (degree) of autonomous systems and, through preferential attachment (new connections favor nodes that are already well connected), creates more demand for bandwidth. Figure 1 shows evidence of this feedback loop in action between 2013 and 2014, when CAIDA measured and reported the changing degrees (number of links) of the top 12 backbone providers. The degree of highly connected systems like Hurricane Electric generally increased, while the degree of less-connected systems stayed the same or decreased. In other words, high bandwidth and high connectivity attract more use, which attracts more bandwidth and connectivity, which attracts still more use. This feedback loop leads to fewer, but larger-capacity, autonomous systems and physical connections. And fewer links mean less resilience.
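The rich-get-richer dynamic can be reproduced with the textbook Barabási-Albert model of preferential attachment. The sketch below is a toy under stated assumptions, not a model of CAIDA's data: each newly added node links to a fixed number of existing nodes (hypothetically two), chosen with probability proportional to their current degree. The oldest, best-connected nodes end up as hubs with far more links than typical newcomers.

```python
import random

def preferential_attachment(n_nodes, links_per_node=2, seed=0):
    """Grow a network in which every new node attaches to `links_per_node`
    distinct existing nodes chosen with probability proportional to degree."""
    rng = random.Random(seed)
    m = links_per_node
    # Seed network: a fully connected core of m + 1 nodes.
    links = [(i, j) for i in range(m + 1) for j in range(i + 1, m + 1)]
    # Every link endpoint is listed once, so a node appears here as many
    # times as its degree; uniform picks from this pool are degree-weighted.
    pool = [node for link in links for node in link]
    for new in range(m + 1, n_nodes):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(pool))
        for t in sorted(targets):
            links.append((new, t))
            pool.extend((new, t))
    return links

def degree_counts(links):
    """Number of links touching each node."""
    deg = {}
    for a, b in links:
        deg[a] = deg.get(a, 0) + 1
        deg[b] = deg.get(b, 0) + 1
    return deg

links = preferential_attachment(2000, links_per_node=2, seed=42)
deg = degree_counts(links)
early = sum(deg[i] for i in range(10)) / 10           # ten oldest nodes
late = sum(deg[i] for i in range(1000, 2000)) / 1000  # newest half
print(f"mean degree, 10 oldest nodes: {early:.1f}; newest 1,000: {late:.1f}")
```

Changing `seed` changes the exact numbers, but the gap between early hubs and late arrivals should persist, because every link a hub gains makes it more likely to gain the next one.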

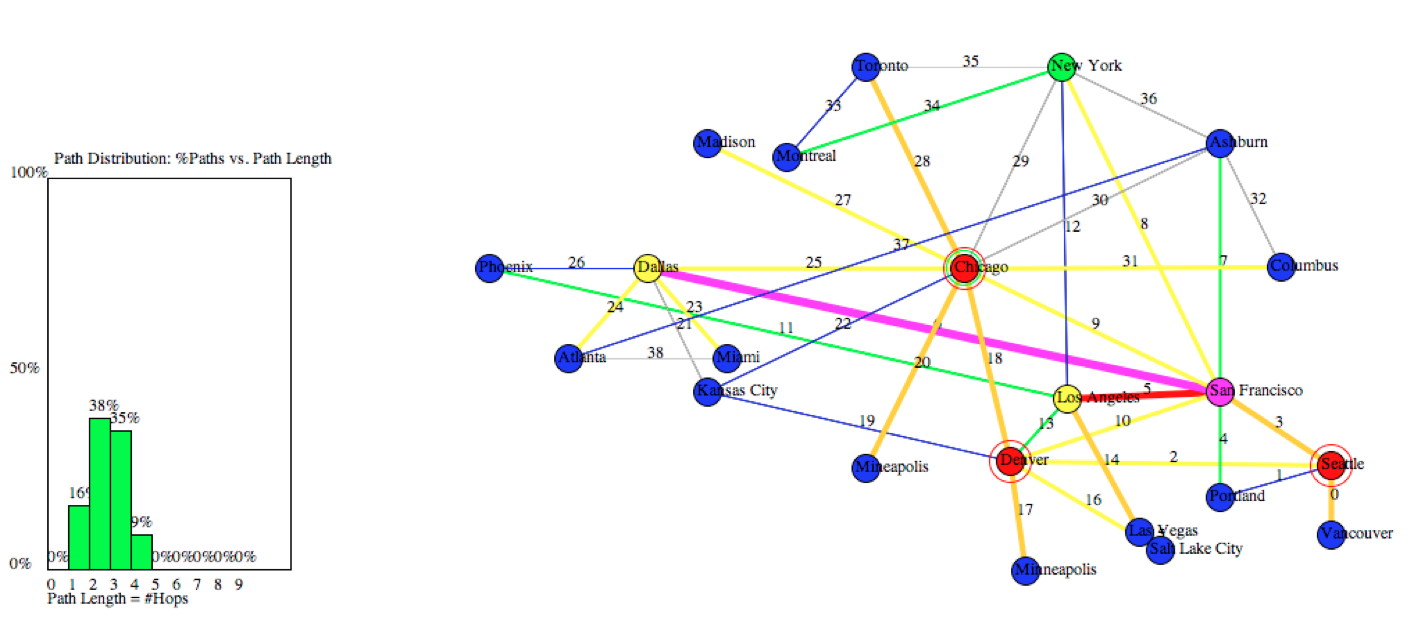

As an example, the Hurricane Electric physical backbone was analyzed for critical nodes and links (see Figure 2). As expected, the Hurricane Electric network shows the effects of the same self-organizing feedback loop: most traffic depends on only a few links, and there are three blocking nodes. These are the critical links and nodes of the physical infrastructure. As this network is optimized to carry more traffic on its most-used links, its vulnerability to targeted attacks only grows.
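If “critical links” is read as links whose loss alone disconnects the network (bridges, in graph-theory terms), they can be found with a simple remove-and-test check. The sketch below assumes a hypothetical backbone, two rings of city hubs joined by a single DEN-CHI link plus one transatlantic spur to LON; it is not Hurricane Electric's actual topology, and it does not measure traffic load, which would require a separate analysis such as counting shortest paths through each link.

```python
from collections import deque

def stays_connected(adj, skip_link):
    """BFS from an arbitrary node, never crossing `skip_link`;
    True if every node is still reached."""
    start = next(iter(adj))
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nbr in adj[node]:
            if nbr in seen or frozenset((node, nbr)) == skip_link:
                continue
            seen.add(nbr)
            queue.append(nbr)
    return len(seen) == len(adj)

def critical_links(links):
    """Links whose removal alone splits a (connected) network in two."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return sorted((a, b) for a, b in links
                  if not stays_connected(adj, frozenset((a, b))))

# Hypothetical backbone: a western ring, an eastern ring, one link between
# them, and a single transatlantic spur.
backbone = [("SEA", "SFO"), ("SFO", "LAX"), ("LAX", "DEN"), ("DEN", "SEA"),
            ("DEN", "CHI"),
            ("CHI", "NYC"), ("NYC", "ASH"), ("ASH", "CHI"),
            ("NYC", "LON")]
print(critical_links(backbone))  # [('DEN', 'CHI'), ('NYC', 'LON')]
```

The ring links survive any single cut, while each bridge is a single point of failure; in this toy network DEN, CHI, and NYC are also blocking nodes.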

The bottom line: the physical Internet is evolving away from resiliency towards fragility. Think of it as the Interstate Highway System, where a major freeway is removed every few months to cut costs and optimize traffic by routing more and more traffic through the most-traveled cities and freeway links. Even if more lanes are added to the overloaded roads, having fewer roads means that blocking any one of them causes a bigger catastrophe.

What to do?

What should we do? The first step in securing the Internet is to reverse regulatory policy so that it rewards and encourages physical redundancy. The private sector must be motivated to increase redundancy and resiliency even when it costs more. Improved resilience is good for business; who is not in favor of better resilience? But current regulatory policy forces the private sector to do the wrong thing.

The public sector (government) must enact smarter regulation, but it must also come up to speed on how the Internet actually works. The idea that the Internet is an open, unfettered free market is false. The net neutrality decision recently made by the FCC doesn’t open and free the Internet; rather, it captures and regulates it. An FCC-regulated Internet will have the same effect on the Internet that the 1996 Telecommunications Act had on the communications industry: through a number of unintended consequences, it will increase vulnerability.

About the Author

Ted Lewis co-founded the Center for Homeland Defense and Security at the Naval Postgraduate School, Monterey, CA, in 2003 (www.CHDS.us) and served as its executive director until 2013. He is the author of Critical Infrastructure Protection in Homeland Security: Defending a Networked Nation (2nd ed., 2015), the standard graduate-level textbook on critical infrastructure. He has published widely on critical infrastructure systems as complex adaptive systems and is a student of Per Bak’s theory of self-organization.

[1] National Security Telecommunications Advisory Committee, Vulnerabilities Task Force Report Concentration of Assets: Telecom Hotels, Department of Homeland Security, Feb. 12, 2003, 1, available at https://www.dhs.gov/sites/default/files/publications/Telecom%20Hotels_2.pdf.

[2] Ibid. at 3.

[3] Ted G. Lewis, Critical Infrastructure Protection in Homeland Security: Defending a Networked Nation, 2nd ed. (Hoboken, NJ: John Wiley & Sons, 2015).

[4] Brad Huffaker, KC Claffy, Young Hyun, and Matthew Luckie, “IPv4 and IPv6 AS Core: Visualizing IPv4 and IPv6 Internet Topology at a Macroscopic Scale in 2014,” Center for Applied Internet Data Analysis, last modified Jan. 6, 2015, http://www.caida.org/research/topology/as_core_network/2014/.

[5] Ibid.