Developing a critical infrastructure’s aptitude for resilience requires proper concepts and definitions as well as good governance.[1] This short paper presents seven lessons about resilience of critical infrastructures (CI) that imply a need for more adequate related standards.

The first lesson is that, beyond the wide variety of its definitions, resilience can be defined simply as a sociotechnical system’s aptitude to withstand incidents, from the mildest to the most extreme.[2] It stems from five activities, which are social processes,[3] spread over three stages:

- Before incidents happen: the system (people, organizations, systems, constructions) is built to avoid and resist foreseeable challenges and to withstand the unexpected;

- While incidents happen: the system a) maintains its missions despite adversity, b) resists its possible destruction, c) recovers a nominal course of life as soon as possible;

- After incidents have happened: the system learns from and adapts to circumstances.

These processes need to be built into organizations. Their absence has adverse effects, as in the construction industry, where disaster management thinking should be embedded in engineering processes and competencies to help improve territorial and community resilience, but alas is not.[4]

The second lesson is that one of the key phenomena observed in the presence of incidents of any intensity is the endangered system’s degradation.[5]

Hurricane Katrina is a good example: the storm surge and winds killed people and brought the New Orleans community, its infrastructures and even the emergency response command chain to their knees. The same was observed when aircraft hit the World Trade Center, destroying lives, activities and infrastructures, even those thought to be resilient, such as telecommunications and the Internet. At the more micro scale of the individual, the 1949 Mann Gulch disaster also illustrates how a subject’s capacity to act, margins of safety and margins of maneuver collapse under critical circumstances, and how much (“peritraumatic”) resilience is a struggle to stay alive.[6]

The “Collapse Ladder” model[7] identifies five levels of degradation that measure[8] how much systems are affected by incidents:

- At level 1 of the Collapse Ladder (disruption), only components trip or are disturbed;

- At level 2 (destabilization), entire subsystems collapse or are disturbed, as do essential connections (for example a peering link, the Home Location Register or cables) or key supplies such as electricity or cooling water;

- At level 3 (paralysis), the whole system shuts down and the support functions (from maintenance through customer management to network management, for instance) are practically unable to do anything to get out of trouble; decision-making is reduced to organizing survival;

- At level 4 (devastation), infrastructures are destroyed and the command chain is outside operators’ remit;

- At level 5 (destruction), the damage is total; the system-environment relationship itself is destroyed.
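
As a minimal sketch, the five levels above can be encoded as an ordered scale so that observed degradations can be compared. The names `CollapseLevel`, `worst_level` and `whole_system_down` are hypothetical and introduced for illustration only; they do not come from the Collapse Ladder model itself.

```python
from enum import IntEnum


class CollapseLevel(IntEnum):
    """Levels of the Collapse Ladder, ordered by severity (illustrative encoding)."""
    DISRUPTION = 1       # only components trip or are disturbed
    DESTABILIZATION = 2  # subsystems, essential connections or key supplies fail
    PARALYSIS = 3        # the whole system shuts down
    DEVASTATION = 4      # infrastructures are destroyed
    DESTRUCTION = 5      # the damage is total


def worst_level(observed):
    """Return the most severe level among the observed degradations."""
    return max(observed)


def whole_system_down(level):
    """True from paralysis upward, i.e. once the whole system has shut down."""
    return level >= CollapseLevel.PARALYSIS


if __name__ == "__main__":
    seen = [CollapseLevel.DISRUPTION, CollapseLevel.DESTABILIZATION]
    top = worst_level(seen)
    print(top.name, whole_system_down(top))
```

Using an ordered enumeration rather than free-text labels is only one possible design choice; it makes "at least paralysis" a simple comparison.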

The third lesson is that resilience is required, today more than ever, because of the world’s current level of complexity.

On a macro level, threats are interdependent,[9] thus creating unpredictable environments and risks for social and economic activities.[10]

On a “meso”, intermediate level, it is the complexity of critical infrastructures’ interdependencies[11] that must attract our attention. When looked at in finer detail,[12] it appears that critical systems and facilities are bound together by an unthinkable number and variety of physical and technical links, wires, exchanges, etc., making it impossible to map them out accurately and reliably.

And finally, the phenomena that may result from major incidents like 9/11 are also unpredictable in terms of the way they unfold and of their possible knock-on effects.[13] In part, this is due to the existence, or to the “poietic”[14] emergence,[15] of “propagation vectors”.[16] These are social, technical or physical channels that, alone or in combination and available at the critical moment, intervene in the process of accidentogenesis to open new ways for an incident to impact the world. For instance, in the 9/11 case, debris from the North Tower, which collapsed at 10:28, hit the Verizon building, which was then flooded and lost its power supply and the telecommunication equipment it hosted. The likelihood of the two buildings’ proximity combining with debris falling from one of them cannot be estimated. Of course, it could have been envisaged, but it was not. And such a vector, surprisingly, brought about the fall of telecommunications, the Internet, etc.

Although there is no proper way of predicting the unpredictable, some “archeology” and risk-focused red-team reviews of system designs, as described in long-buried system documentation, are a minimal precaution to raise awareness of potential propagation vectors. However, the limit is quickly reached, as past investments are impossible to undo.

The fourth lesson is that resilience, being an aptitude of sociotechnical systems, is an ambition, a goal that managers, engineers and other staff must pursue collectively and individually. But how can we express such a goal?

When the new “Telecom Package”[17] was released by the European Commission in November 2009, its Article 13a came as a surprise: it stipulated that operators of public communications networks and publicly available electronic communications services “notify the competent national regulatory authority of a breach of security or loss of integrity that has had a significant impact on the operation of networks or services” in each Member State. What were those significant incidents like?

The study we performed at the time[18] quickly discarded the old risk matrix (likelihood × damage) in favor of a more flexible classification of incidents along two scales:

- The “impact” of the incident (Negligible: Customers, users, and client systems are not affected; Tolerable: Customers, users or client systems are affected within given limits; Intolerable: Customers, users or client systems are affected beyond specified limits);

- The “mode of control” of the situation (Procedure-based: diagnosis & repair procedures or a BCP/DRP; Creative: inventing ad hoc solutions or making tactical decisions).

These two criteria define six classes of incidents, the last four being deemed significant as they belong in the category of emergencies, whereas the first two are everyday incidents. It was accepted that these classes correspond to six levels along a “Resilience scale”, each calling for specific mechanisms:

| Resilience Scale | Typical Response Mechanisms |
| --- | --- |
| R6 - Extreme shock | Crisis Management Mechanism & Contract Management |
| R5 - Severe shock | Crisis Management Mechanism |
| R4 - Severe incident | Business / ICT Continuity Plans & Contract Management |
| R3 - Major incident | Business / ICT Continuity Plans |
| R2 - Minor incident | Creative Solutioning & Lesson Learning |
| R1 - Minor event | Supervision, Diagnosis & Repair Procedures |

Aiming for level R6 is a goal, an ambition that requires that all measures needed at lower levels be in place and operational, hence making resilience the fruit of “cumulative engineering”.
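
The two-scale classification and the cumulative reading of the Resilience scale can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the study's method: the text does not spell out which (impact, mode of control) pair maps to which level, so the ordering used here (impact first, then mode of control) is an assumption, chosen because it keeps the two negligible-impact classes as the "everyday" levels R1 and R2. All identifiers are hypothetical.

```python
from enum import IntEnum


class Impact(IntEnum):
    NEGLIGIBLE = 0   # customers, users and client systems are not affected
    TOLERABLE = 1    # affected within given limits
    INTOLERABLE = 2  # affected beyond specified limits


class ControlMode(IntEnum):
    PROCEDURE_BASED = 0  # diagnosis & repair procedures or a BCP/DRP
    CREATIVE = 1         # ad hoc solutions or tactical decisions


# Typical response mechanisms per level, as listed in the Resilience scale table.
MECHANISMS = {
    1: "Supervision, Diagnosis & Repair Procedures",
    2: "Creative Solutioning & Lesson Learning",
    3: "Business / ICT Continuity Plans",
    4: "Business / ICT Continuity Plans & Contract Management",
    5: "Crisis Management Mechanism",
    6: "Crisis Management Mechanism & Contract Management",
}


def resilience_level(impact, mode):
    """Map an incident to a level 1..6 (R1..R6).

    ASSUMPTION: levels are ordered by impact first, then by mode of control.
    The source does not state this mapping explicitly.
    """
    return 2 * int(impact) + int(mode) + 1


def is_significant(level):
    """The last four classes (R3..R6) are emergencies; R1 and R2 are everyday incidents."""
    return level >= 3


def cumulative_mechanisms(target_level):
    """Aiming for a level requires every mechanism of the levels below it as well."""
    return [MECHANISMS[k] for k in range(1, target_level + 1)]


if __name__ == "__main__":
    level = resilience_level(Impact.TOLERABLE, ControlMode.PROCEDURE_BASED)
    print(f"R{level}", is_significant(level))
    for mechanism in cumulative_mechanisms(level):
        print("-", mechanism)
```

The `cumulative_mechanisms` helper is where the "cumulative engineering" idea shows up: targeting R6 returns the mechanisms of all six levels, not only the crisis management ones.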

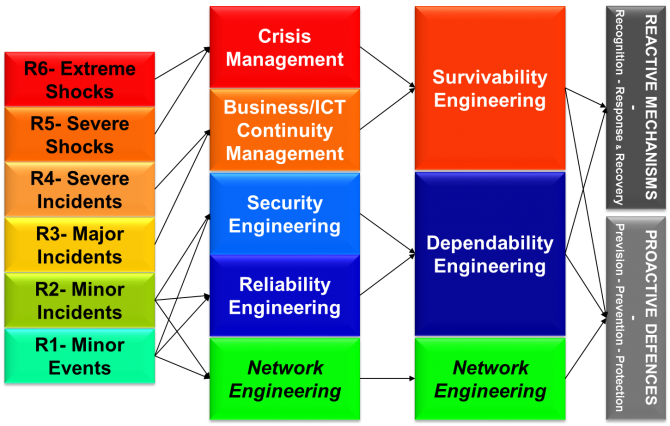

The fifth lesson about this cumulative engineering of resilience is that it mobilizes all known risk reduction disciplines.[19]

Under the umbrella of risk management, resilience engineering contributes to delivering six types of mechanisms as per the P3R3 model[20]: Prevision (of threats, analysis & anticipation), Prevention (of threats when feasible), Protection (against residual threats not reduced at source nor deterred), Recognition (of incidents when happening), Response (to incidents), Restoration (afterwards).[21]

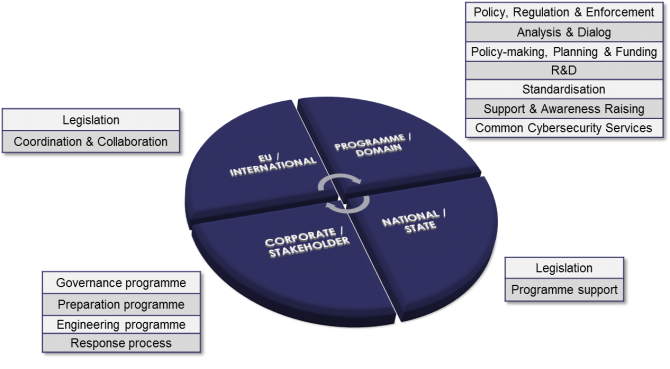

The sixth lesson is the need for governance, because combining these disciplines requires strong commitments and clear directions in a complex, multi-stakeholder, cross-border environment of interdependencies. The “Multilevel Governance Framework” presents in detail the four levels (International, Domain, National, Corporate) and fifteen generic activities that govern critical infrastructures’ security and protection in the US and EU.[22]

Within this framework, resilience engineering comes as the result of combined efforts from the four levels.

The seventh lesson comes as a request for more adequate standardization.

We need such a global, multilevel resilience governance and engineering framework to articulate the levels, activities and elements that contribute to making critical infrastructures resilient.

Standardization must therefore create the resilience management and engineering standards that this need implies. It must turn the page on old-style business continuity management (BCM) and take better account of new, dedicated scientific knowledge. And as the latter is still under construction, standards must allow managers the margins of maneuver they need to progress at an optimal pace.

Resilience is an aptitude that transcends the present, involving a permanent rework of concepts as well as of hands-on measures taken on the ground. It also transcends the divide between academics and practitioners.

ISO/TC 292 must now create the clear, adequate standards, concepts and vocabulary that will help, not deter, professionals and managers.

Paul Théron, PhD, FBCI, is co-director of the French “Aero Spatial Cyber Resilience” research chair. His work on resilience includes: resilience governance; the (cyber) resilience of massively collaborative systems such as ATM or Telecommunications infrastructures; and Multi Intelligent Agent Systems for Cyber Defense. Former member of ENISA’s Permanent Stakeholders Group, he also leads DG JRC’s European Thematic Group on the “Certification of the cybersecurity of Industrial Automation & Control Systems” and has been teaching resilience in French and Swiss universities for the past ten years.

References

[1] L. Paul Lewis, John R. Hummel, & Ignacio Martinez-Moyano, “Incorporating the Rule of Law in Resiliency Analyses,” The CIP Report 14, no. 6 (Jan. 2015): 6-10, available at https://cip.gmu.edu/wp-content/uploads/2013/06/150_The-CIP-Report-January-2015_Resilience.pdf.

[2] Paul Theron, “ICT Resilience as Dynamic Process and Cumulative Aptitude,” in Critical Information Infrastructure Protection and Resilience in the ICT Sector, ed. Paul Theron and Sandro Bologna (Hershey, PA: IGI Global, 2013).

[3] Yrjö Engeström, “Activity Theory as a Framework for Analyzing and Redesigning Work,” Ergonomics 43, no. 7 (2000): 960-974, available at http://courses.ischool.berkeley.edu/i290-3/s05/papers/Activity_theory_as_a_framework_for_analyzing_and_redesigning_work.pdf.

[4] Bingunath Ingirige, “Theorizing Construction Industry Practice within a Disaster Risk Reduction Setting: Is it a Panacea or an Illusion?” Construction Management and Economics 34, no. 7-8 (2016): 592-607, available at http://www.tandfonline.com/doi/full/10.1080/01446193.2016.1200735.

[5] As in James P.G. Sterbenz, et al., “Resilience and Survivability in Communication Networks: Strategies, Principles and Survey of Disciplines,” Computer Networks 54, no. 8 (June 1, 2010): 1245-1265, available at http://www.sciencedirect.com/science/article/pii/S1389128610000824; or Chris Hall, Richard Clayton, Ross Anderson, and Evangelos Ouzounis, “Inter-X: Resilience of the Internet Interconnection Ecosystem” (ENISA, 2011).

[6] Norman Maclean, Young Men and Fire (Chicago: Chicago University Press, 1993); or Paul Theron, “Lieutenant A and the Rottweilers: A Pheno-cognitive Analysis of a Fire-fighter’s Experience of a Critical Incident and Peritraumatic Resilience” (thesis, University of Glasgow, 2014), http://theses.gla.ac.uk/5146/.

[7] Theron, “ICT Resilience as Dynamic Process and Cumulative Aptitude.”

[8] European Commission, DG JLS, “A Study on Measures to Analyse and Improve European Emergency Preparedness in the Field of Fixed and Mobile Telecommunications and Internet,” Study EC JLS/2008/D1/018 (2011), available at http://ec.europa.eu/dgs/home-affairs/e-library/docs/pdf/2010_study_telecommunications_and_internet_en.pdf.

[9] World Economic Forum, Global Risks Report 2016 (Geneva: World Economic Forum, 2016), available at http://www3.weforum.org/docs/Media/TheGlobalRisksReport2016.pdf.

[10] Nik Gowing and Chris Langdon, Thinking the Unthinkable: A New Imperative for Leadership in the Digital Age (London: CIMA, 2015), available at http://thinkunthinkable.org/downloads/Thinking-The-Unthinkable-Report.pdf.

[11] Peter Sommer and Ian Brown, Reducing Systemic Cybersecurity Risk (Washington, D.C.: OECD, 2011), available at https://www.oecd.org/gov/risk/46889922.pdf.

[12] Steven M. Rinaldi, James P. Peerenboom, and Terrence K. Kelly, “Identifying, Understanding, and Analyzing Critical Infrastructure Interdependencies,” IEEE Control Systems Magazine, Dec. 2001, http://www.ce.cmu.edu/~hsm/im2004/readings/CII-Rinaldi.pdf.

[13] Theron, “ICT Resilience as Dynamic Process and Cumulative Aptitude.”

[14] Merriam-Webster Online, s.v. “-poietic,” accessed Aug. 8, 2016, http://www.merriam-webster.com/dictionary/poietic (adj. combining form, “productive: formative <hematopoietic>”).

[15] Volker Grimm and Uta Berger, “Structural Realism, Emergence, and Predictions in Next-Generation Ecological Modelling,” Ecological Modelling 326 (Apr. 24, 2016): 177-187, available at http://www.sciencedirect.com/science/article/pii/S0304380016000077.

[16] Theron, “ICT Resilience as Dynamic Process and Cumulative Aptitude.”

[17] Directive 2009/140/EC, of the European Parliament and of the Council of 25 November 2009, 2009 O.J. (L 337) 37-69, available at http://eur-lex.europa.eu/LexUriServ/LexUriServ.do?uri=OJ:L:2009:337:0037:0069:EN:PDF.

[18] European Commission, DG JLS, “A Study on Measures to Analyse and Improve European Emergency Preparedness in the Field of Fixed and Mobile Telecommunications and Internet.”

[19] Paul Theron, “Towards a General Theory of Resilience. Lessons from a Multi-Perspective Research” (lecture/presentation, ERNCIP Training for Professionals in CIP: From Risk Management to Resilience, Brussels, 2016), available at https://halshs.archives-ouvertes.fr/cel-01342846v2.

[20] Ibid.

[21] This model compares to Deborah J. Bodeau and Richard Graubart, “Cyber Resiliency Engineering Framework,” Document No. MTR110237 (Bedford, MA: MITRE, 2011), available at https://www.mitre.org/sites/default/files/pdf/11_4436.pdf; and Sterbenz et al., “Resilience and Survivability in Communication Networks.”

[22] Paul Theron, “Towards a General Theory of Resilience.”